Adam A Method For Stochastic Optimization Ieee

During training, the Adam algorithm (Kingma and Ba, 2015), a method for stochastic optimization, is used to minimize l in a way that keeps computation and memory requirements low. The gradient provides information on the direction in.

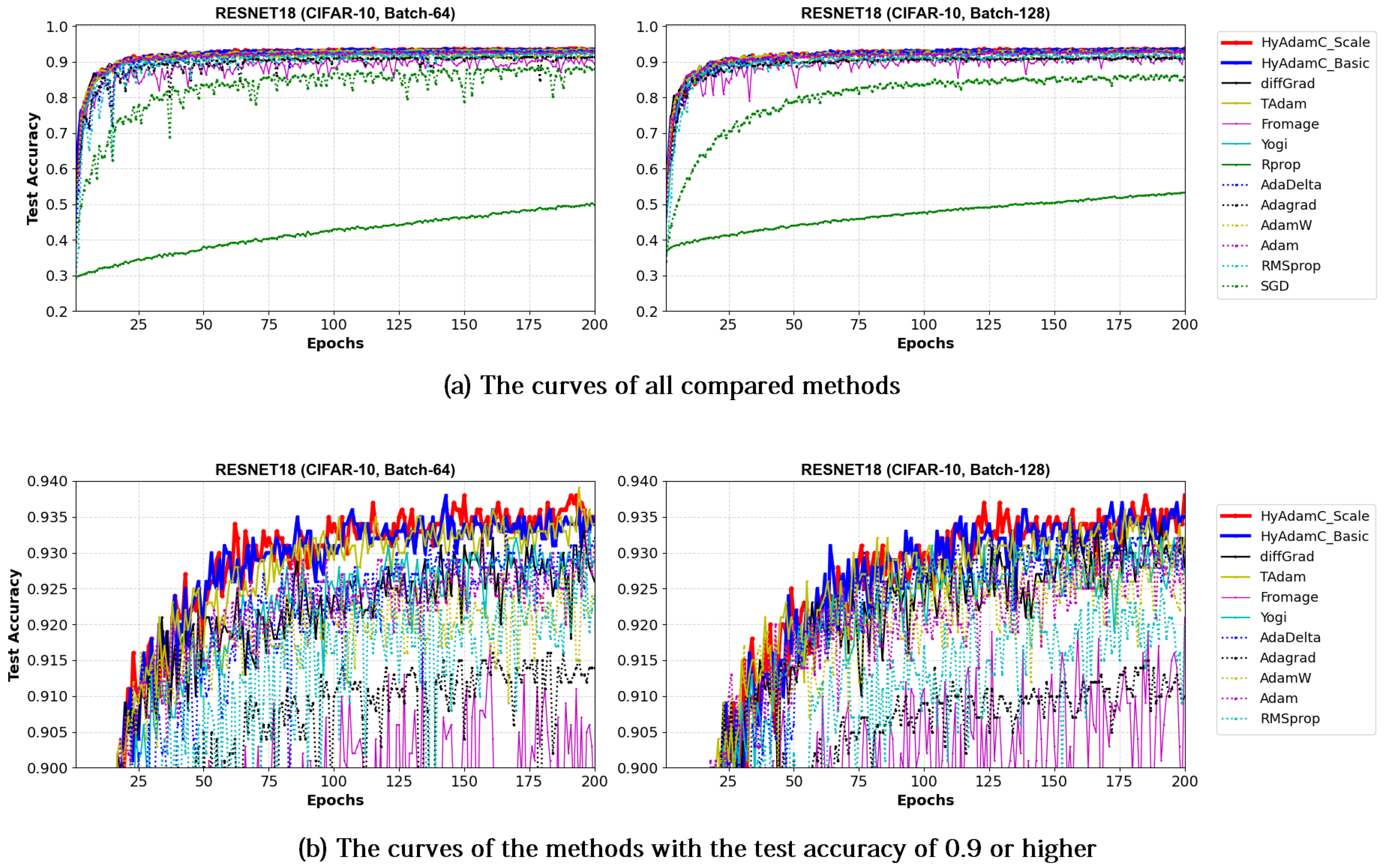

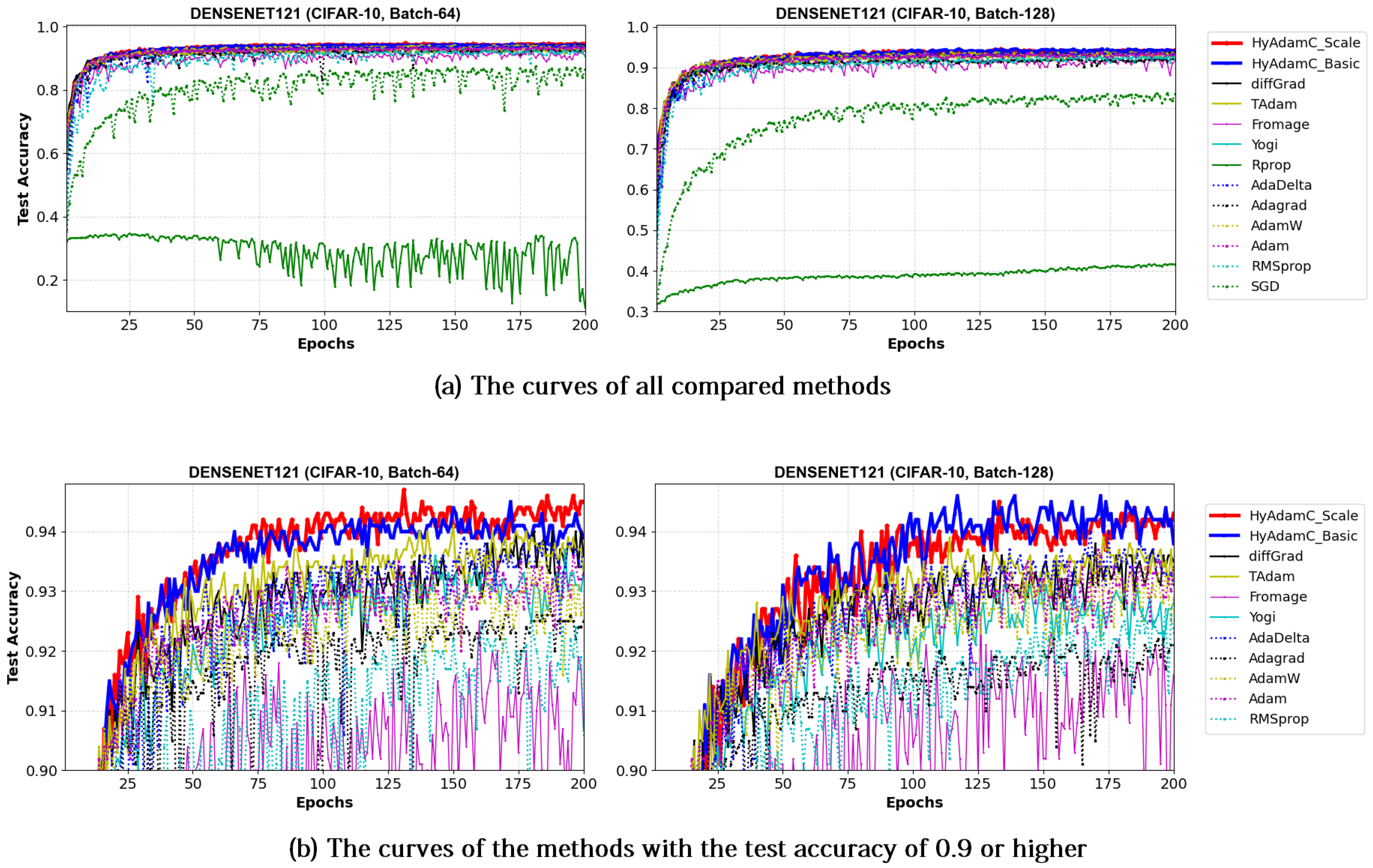

Sensors | Free Full-Text | HyAdamC: A New Adam-Based Hybrid Optimization Algorithm for Convolution Neural Networks

We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments.
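As a rough illustration of what "adaptive estimates of lower-order moments" can look like in practice, the sketch below keeps exponential moving averages of the gradient and of its square, corrects their initialization bias, and scales each parameter's step accordingly. The function name `adam_minimize`, the toy quadratic objective, and the step count are assumptions made for this example; the default values of `lr`, `beta1`, `beta2`, and `eps` are the ones commonly quoted from the 2015 paper.

```python
import math


def adam_minimize(grad, theta, steps=1000, lr=0.001,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Sketch of the Adam update for a list of float parameters.

    grad:  function mapping a parameter list to a gradient list
    theta: initial parameters (copied, not modified in place)
    """
    theta = list(theta)
    m = [0.0] * len(theta)  # moving average of gradients (first moment)
    v = [0.0] * len(theta)  # moving average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(theta)
        for i, g_i in enumerate(g):
            m[i] = beta1 * m[i] + (1.0 - beta1) * g_i
            v[i] = beta2 * v[i] + (1.0 - beta2) * g_i * g_i
            # Both averages start at zero, so divide out the startup bias.
            m_hat = m[i] / (1.0 - beta1 ** t)
            v_hat = v[i] / (1.0 - beta2 ** t)
            # Per-parameter step: large recent gradients shrink the step.
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


# Hypothetical toy objective: f(x, y) = (x - 3)^2 + (y + 1)^2
def toy_grad(theta):
    x, y = theta
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]


result = adam_minimize(toy_grad, [0.0, 0.0], steps=5000, lr=0.01)
print(result)  # should end up close to [3, -1]
```

Only two extra values per parameter (`m` and `v`) are stored here, which is one way to read the "little memory requirement" claim. Because the step is normalized by the square root of the second-moment estimate, the very first update has magnitude close to `lr` regardless of the gradient's scale.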

Adam: A Method for Stochastic Optimization, 2015. The method is straightforward to.

We propose Adam, a method for efficient stochastic optimization that only requires first-order gradients with little memory requirement. The method computes.

Stochastic gradient-based optimization is of core practical importance in many scientific and engineering fields.

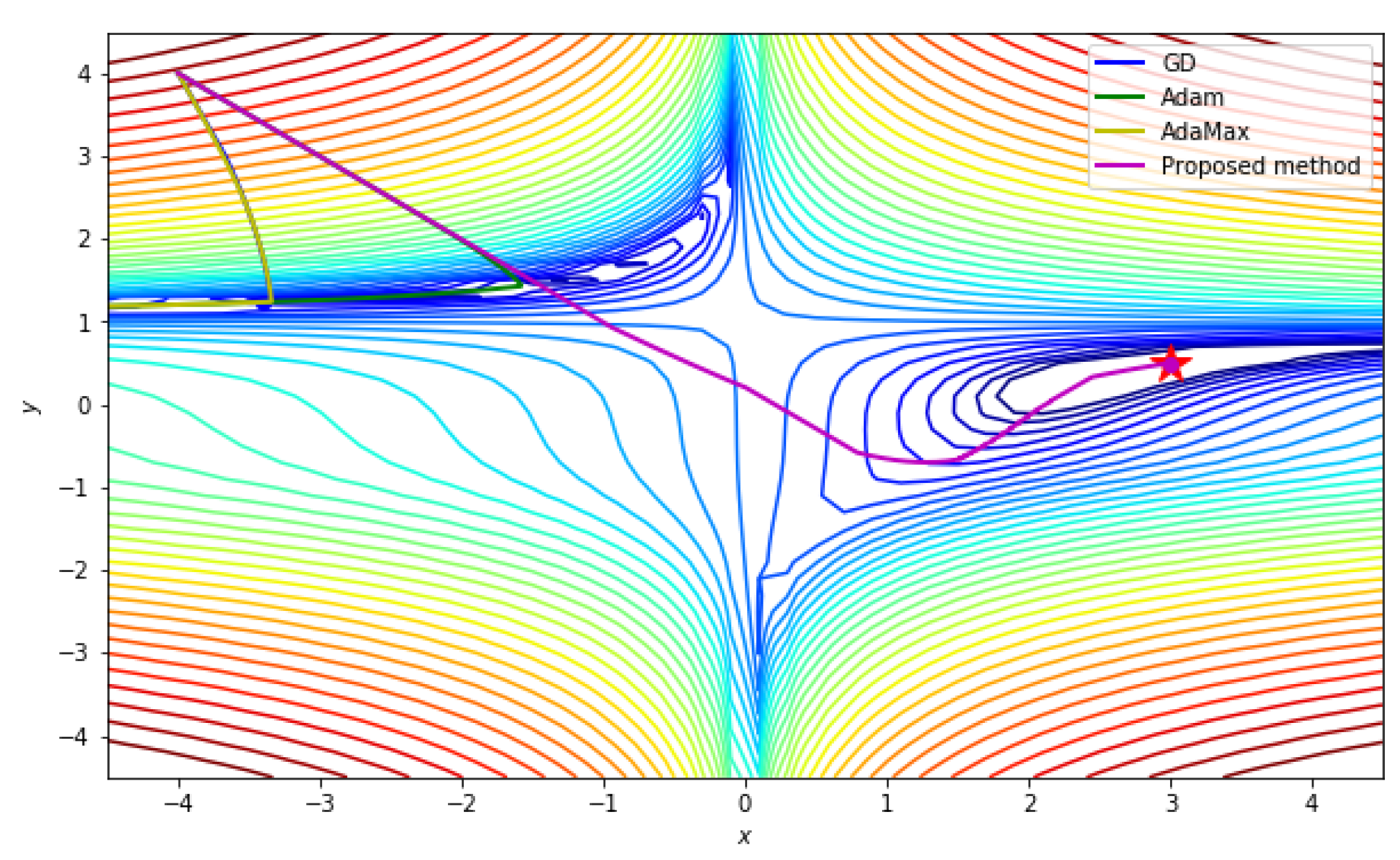

Adaptive optimization algorithms such as Adam and RMSprop have witnessed better optimization performance than stochastic gradient descent (SGD) in some. Stochastic compositional optimization generalizes classic (non-compositional) stochastic optimization to the minimization of compositions of.

In this paper we propose a stochastic parallel Successive Convex Approximation-based best-response algorithm for general nonconvex stochastic sum-utility optimization. Stochastic gradient descent (SGD) is one of the core techniques behind the success of deep neural networks.
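For contrast with adaptive methods, here is a minimal sketch of plain SGD, which applies one global step size to every parameter and keeps no moment estimates. The function name `sgd_linear_fit`, the noise-free line `y = 2x + 1`, and the chosen `lr`, `steps`, and `seed` values are all assumptions for illustration and do not come from any of the papers quoted above.

```python
import random


def sgd_linear_fit(data, steps=2000, lr=0.05, seed=0):
    """Fit y = w * x + b with one randomly drawn sample per update."""
    rng = random.Random(seed)  # local generator so runs are repeatable
    w, b = 0.0, 0.0
    for _ in range(steps):
        x, y = rng.choice(data)   # the "stochastic" part: one sample
        err = (w * x + b) - y     # derivative of 0.5 * err^2 w.r.t. prediction
        w -= lr * err * x         # same step size lr for every parameter
        b -= lr * err
    return w, b


# Hypothetical data: points on the line y = 2x + 1 for x in [-1, 1]
points = [(k / 10.0, 2.0 * (k / 10.0) + 1.0) for k in range(-10, 11)]
w, b = sgd_linear_fit(points)
print(w, b)  # should end up close to 2 and 1
```

The only state carried between updates is the parameters themselves, so SGD needs less memory than Adam, at the cost of having to tune a single step size that suits all parameters.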

The 3rd International Conference for Learning Representations, San Diego, 2015.

Applied Sciences | Free Full-Text | An Effective Optimization Method for Machine Learning Based on Adam

[PDF] Adam: A Method for Stochastic Optimization | Semantic Scholar

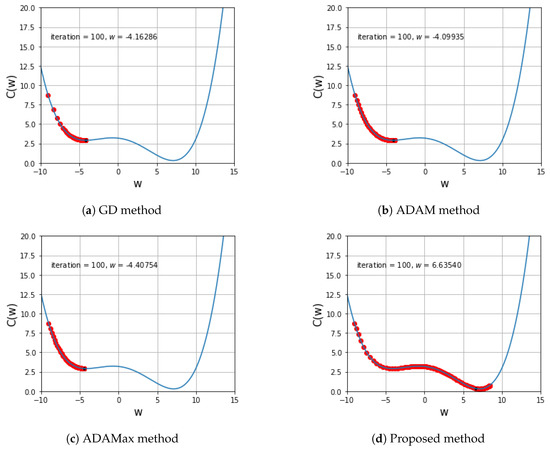

Symmetry | Free Full-Text | An Enhanced Optimization Scheme Based on Gradient Descent Methods for Machine Learning

https://www.mdpi.com/2076-3417/9/17/3569/pdf

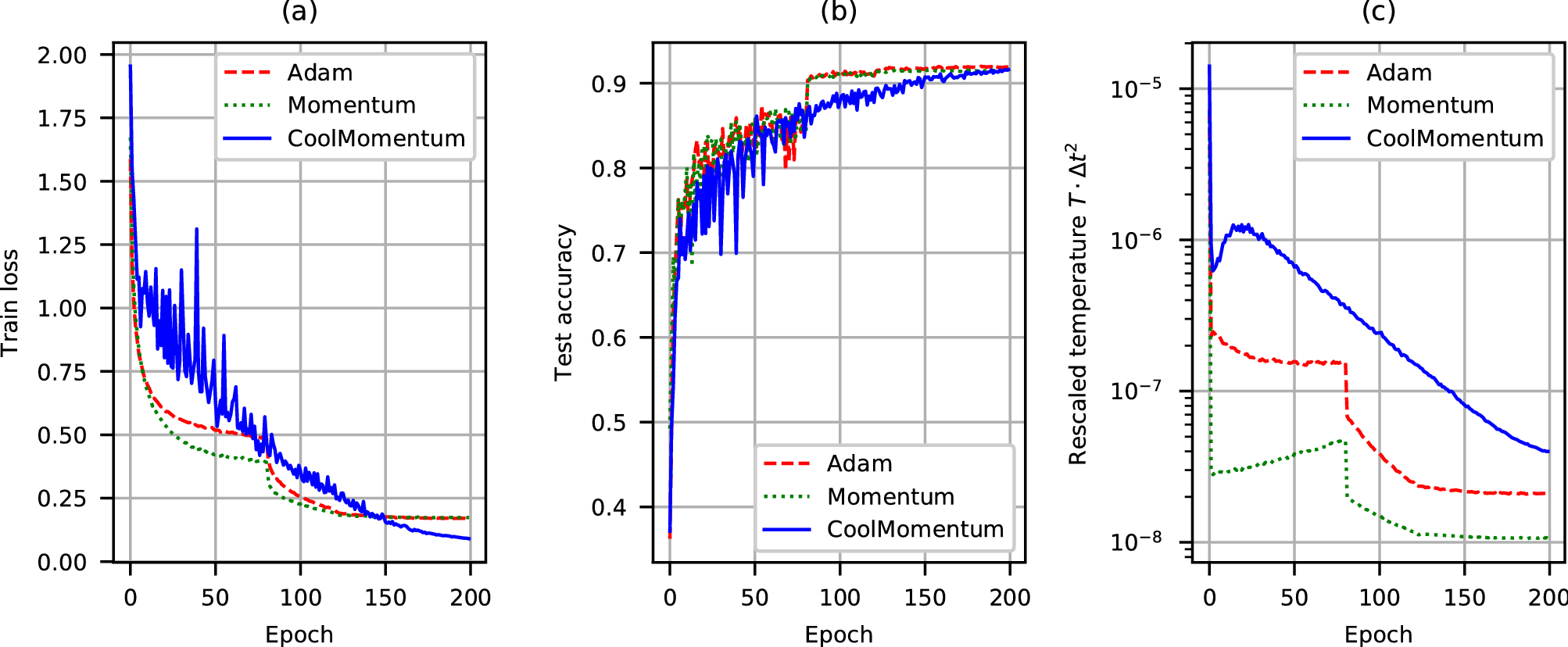

CoolMomentum: A Method for Stochastic Optimization by Langevin Dynamics with Simulated Annealing | Scientific Reports

Adam: A Method for Stochastic Optimization | arXiv Vanity
