A Method for Stochastic Optimization (2014)

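22 Dec 2014. Diederik P. Kingma, Jimmy Ba. We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments.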

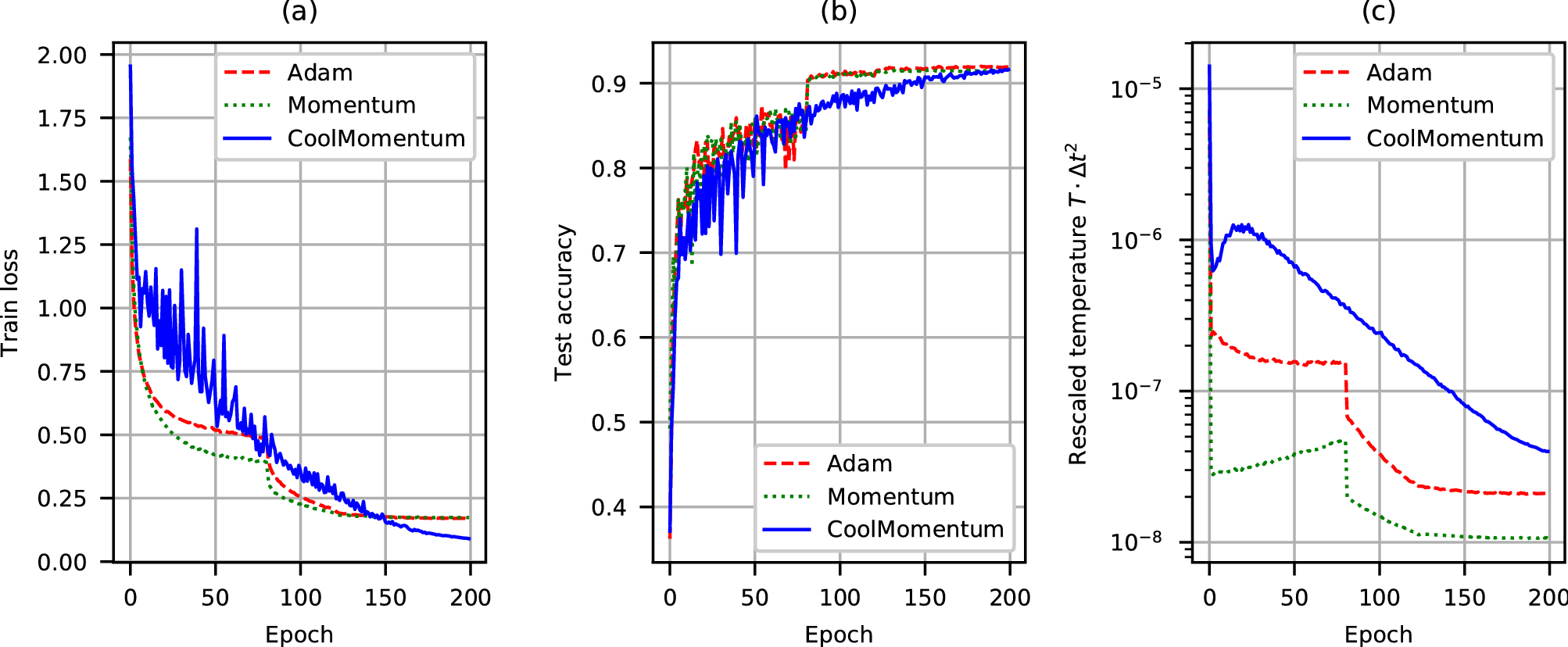

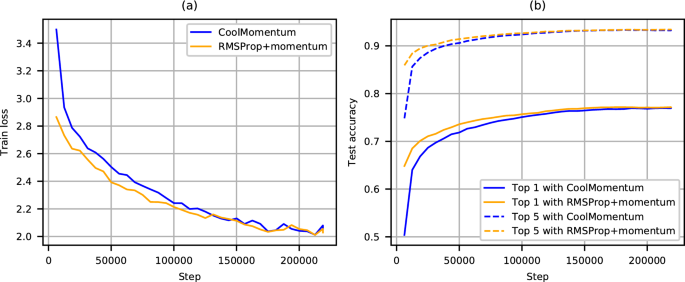

CoolMomentum: A Method for Stochastic Optimization by Langevin Dynamics with Simulated Annealing (Scientific Reports)

Stochastic gradient descent is the simplest optimization method and is the method of choice for many applications. An example from a citing paper: "We train T_w according to the objective function in Equation 3 with the Adam (Kingma and Ba, 2014) stochastic optimization method with batch size 16 for 100 passes."
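As a rough illustration of minibatch stochastic gradient descent, the sketch below fits a one-dimensional linear model by mean squared error. The model, the synthetic data, the learning rate, and the function name `sgd_linear_fit` are all assumptions made for this example; only the batch size of 16 and the 100 passes echo the training setup quoted above.

```python
import random


def sgd_linear_fit(xs, ys, lr=0.1, batch_size=16, passes=100, seed=0):
    """Fit y ~ w * x + b by minibatch SGD on mean squared error (illustrative)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(passes):
        # Reshuffle once per pass so each minibatch is a fresh random subset.
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the minibatch mean squared error w.r.t. w and b.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # Plain SGD step: move against the gradient estimate.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Hypothetical noiseless data generated from y = 3x + 1.
xs = [i / 32 for i in range(64)]
ys = [3.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
print(w, b)
```

On this toy problem the fitted values should approach w = 3 and b = 1; on noisy data the iterates would instead fluctuate around the minimizer unless the learning rate is decayed.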

Deep Learning-Based Model Predictive Control. Stochastic optimization methods are used to ... Has been cited by the following article ...

Formally, it may be written as ... arXiv: Diederik P. Kingma, Jimmy Ba.

Stochastic Optimization, Lauren A. Hannah.

The sum-of-functions (SFO) method (Sohl-Dickstein et al., 2014) is a recently proposed quasi-Newton method that works with minibatches of data and has shown good ... (Adam: A Method for Stochastic Optimization, 2014.)

The method is straightforward to implement. November 10, 2014. DOI: ...

The equitable and efficient signal timing stochastic SO problem is solved well.

We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments. A bi-objective stochastic simulation-based optimization (BOSSO) method is proposed. Hannah, April 4, 2014. 1 Introduction: Stochastic optimization refers to a collection of methods for minimizing or maximizing an ...
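To make "adaptive estimates of lower-order moments" concrete, here is a minimal sketch of the Adam update as it is commonly stated: exponential moving averages of the gradient (first moment) and of the squared gradient (second moment), each corrected for initialization bias. The function name `adam_minimize`, the toy quadratic objective, and the step count are assumptions for illustration; the `beta1`, `beta2`, and `eps` defaults follow the values usually cited from the paper, and this is not a reference implementation.

```python
import math


def adam_minimize(grad_fn, theta, steps=2000, lr=0.001,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adam updates on a list of parameters (illustrative sketch)."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first-moment (mean) estimate of the gradient
    v = [0.0] * len(theta)  # second-moment (uncentered variance) estimate
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early values are too small.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def quadratic_grad(theta):
    # Gradient of the hypothetical objective f(x, y) = (x - 3)^2 + 10 * (y + 1)^2.
    x, y = theta
    return [2 * (x - 3), 20 * (y + 1)]


result = adam_minimize(quadratic_grad, [0.0, 0.0], steps=5000, lr=0.01)
print(result)
```

Because each step divides by the square root of the second-moment estimate, the very first update has magnitude close to `lr` in every coordinate regardless of the gradient's scale, which is one reason the two differently scaled coordinates above make progress at similar rates.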

Numerical Optimization: Understanding L-BFGS (aria42)

[PDF] Adam: A Method for Stochastic Optimization (Semantic Scholar)

A Unified Framework for Stochastic Optimization (ScienceDirect)

Slime Mould Algorithm: A New Method for Stochastic Optimization

Adam: A Method for Stochastic Optimization (Papers With Code)

The Importance of Better Models in Stochastic Optimization (PNAS)
